At two recent events attended by academics, entrepreneurs and business executives, I began my remarks with two simple questions: who feels we are living in a time of remarkable innovation in science and technology? And, who believes that the way we develop and grow has hit a wall? Both times, most hands came up. This odd contradiction is the defining feature of our days: we believe in innovation, but have given up on progress. Our real challenge is not the proverbial fight between man and machine recounted over and over in the popular press; it is the struggle against a technological discourse that discounts our ability to write a better version of the future, making us passive subjects in a world of volatility, uncertainty, complexity and ambiguity. To write a better history of the future, we must learn how to liberate our imagination from the grip of the present.

***

A great benefit of designing the world’s largest gathering of decision-makers is receiving plenty of “you must read this article” emails. I file most of them away for quieter hours, but this one caught my eye: “What the military of the future will look like, from hypervelocity weapons to self-driving boats”. A catchy title followed by flashy images and little text: the formula for success in the media’s battle for likes and shares.

But it was not the article’s scream for attention that made me pause; it was its narrow-minded way of dreaming about progress. Autonomous vehicles, humanoid robots, 3D-printed organs: the most incredible ideas seem within a generation’s reach. Reality feels like the beta version of a science-fiction dream. Yet with rising tensions from the Sahel to the South China Sea and brutal terrorist attacks in many capitals, what first jumps to mind is how technology can help us beat enemies faster, smarter and harder.

Why don’t we ask how all this ingenuity could help us find better ways than war to settle conflicts? Because we have lost sight of the bigger picture: we got stuck in a frame in which protracted economic, societal, environmental and political crises are the “new normal”.

We are in the midst of intense technology hype, driven partly by real change and partly by a sensationalist, self-referential media machine. Technological change deserves the attention it gets, but how we dream of technologies reveals more about us than about tomorrow. To shape a better future, we must liberate our imagination from the grip of the present; we must step out of the frame.

When fears collide with ideas

We commonly associate dreaming with creativity: randomly firing neurons create images in our minds that are inaccessible to our waking senses. In his famous 1899 treatise, “The Interpretation of Dreams”, Sigmund Freud argued that the source of these images is desire; “we dream about what we dream of”. Though still influential, this interpretation was later challenged by psychologists like Calvin Hall, who introduced the world of dreaming to the world of data. After studying more than 50,000 dreams, Hall found that most of them are not expressions of desire but reproductions of fears and prejudices in our resting minds. Dream researchers like Ernest Hartmann see in this a form of built-in nocturnal therapy: the mind replays frightening experiences almost exactly as they happened to make them gradually less threatening.

The same holds true for how societies dream. New ideas do not spread in a vacuum. They are passed on through existing social networks, get filtered and amplified, and combine and collide with fears and desires. The mechanics of this are messy and complex: some studies find that nothing snowballs like fear; others argue that experiences of awe are the most viral.

The common denominator of such findings is that strong emotional responses trigger our desire to communicate with others. We owe this in part to so-called mirror neurons: when we see someone expressing an emotion, be it pain or joy, regions of our brain activate much as if we were experiencing the feeling ourselves. And as we feel the same, we are more likely to pass the emotion on. While this mechanism is universal, not all dreams are equal. Those of the best-connected are more likely to be multiplied, sometimes creating the impression that an idea is common when it is not. Social scientists call this the “majority illusion”.

If dreaming were inconsequential, such hard-wired biases would not matter. But of course it is not. Dreams can make us go out and spend, start businesses and build factories; they can also put fear in our hearts, make us lock our doors and save our resources. Dreams can blind us to reality and provide cover for political horror, but they can also inspire us to great achievements. “Longing on a large scale is what makes history,” writes novelist Don DeLillo.

We believe in innovation, but have given up on progress

Nowhere do ideas, fears and desires react more strongly than in how we dream of future technologies. At two recent speaking events, one mainly attended by academics and entrepreneurs, the other by business leaders, I asked: who feels that we are living in a time of remarkable innovation in science and technology? And who believes that the way we develop and grow has hit a wall? Both times, most hands came up. To me this is a defining feature of our days: we feel we live in an era of great promise, mostly thanks to staggering breakthroughs in science and technology; yet at the same time, we see seemingly insurmountable limits in the form of economic, political and environmental risks.

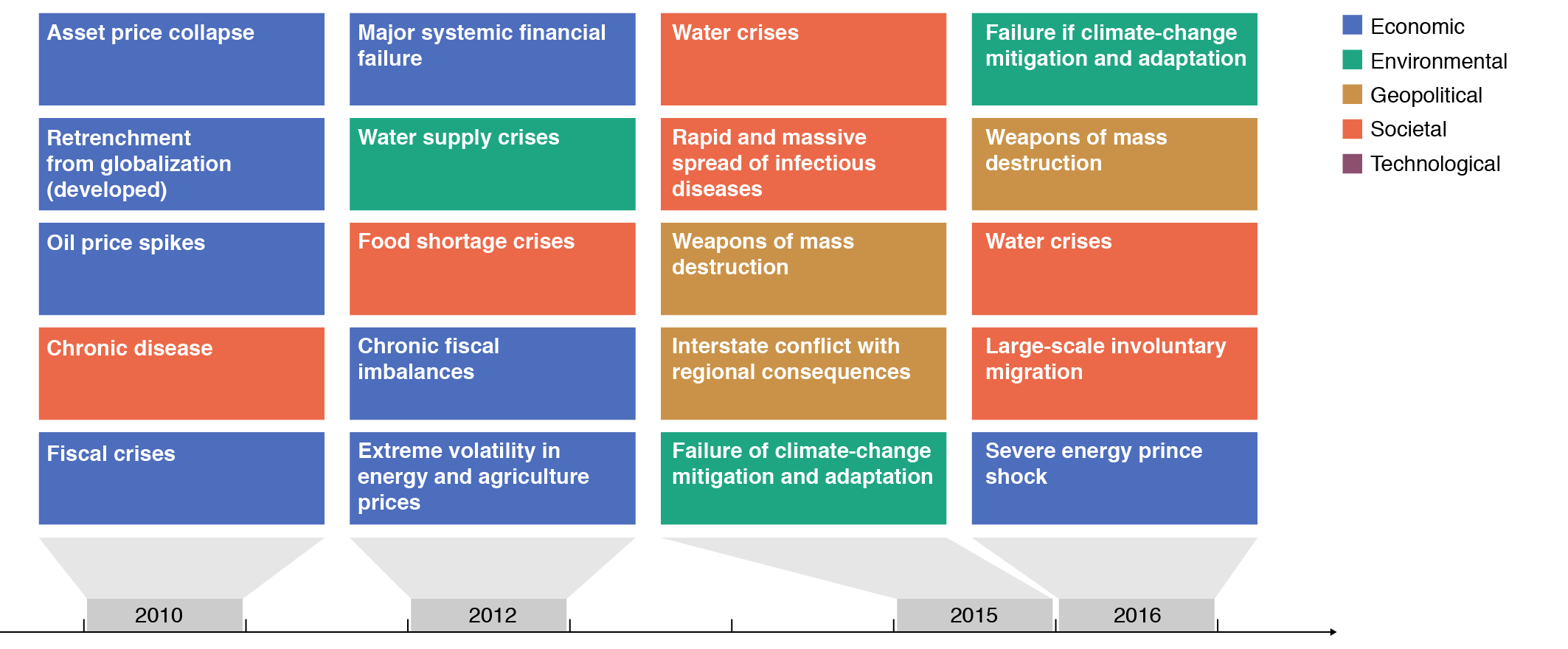

For more than a decade, the World Economic Forum has tracked these limits in its Global Risks Report, which draws on 750 expert perspectives on 29 risks over a 10-year horizon. In 2016, large-scale involuntary migration, extreme weather events, the failure to mitigate and adapt to climate change, and interstate conflict were ranked as the highest risks. In previous editions, perceptions were geared more towards economic risks such as financial collapse. One could read this as “thank God, we dealt with these”; another reading is that even the most brilliant minds are not sheltered from cognitive biases.

What happens when fears meet fantastic machines? Shared visions of the future shift from expressions of desire to reproductions of mundane fears and prejudices (not so on a consumer scale, where the growing number of “female” robots represents the other end of the spectrum in a rather disturbing way). When fears meet fantastic machines, promise turns into peril, and dreams turn into nightmares.

Two nightmare narratives dominate the popular discourse. One is about exploitation by new means: we were betrayed by our banks; now we are being beaten by our machines and then dismissed by their owners. This is the fear of today’s disadvantaged and disenfranchised, those left behind by contemporary capitalism. The other nightmare is about the erosion of the familiar, the unravelling of industries, maybe even the end of order as such. This is the fear of those who fared well under the status quo, including well-educated workers from financial services to healthcare whose workplaces are beginning to be invaded by intelligent machines. As different as they are, both narratives portray progress as a problem, as “disruption”.

In an odd way, we are content with the idea that disruption is the norm. Like Joseph K., the protagonist of Kafka’s The Trial, we accept a world that we are powerless to control. We believe in innovation, but have given up on progress and on the possibility of moral and social improvement.

The real challenge is not the proverbial fight between man and machine, recounted countless times since the Luddite era. On the one hand, it is the struggle against cynicism and apathy, the toxic by-products of the trust squandered in the crises of our decade; on the other, it is the struggle against prophets who promise that technology will solve all problems. On both ends, it is the struggle against a technological discourse that discounts our ability to shape a better future, a discourse that makes us passive subjects in a world of volatility, uncertainty, complexity and ambiguity: the watchwords of this decade.

The poetry of progress

Technology has always evoked hope and fear in society. Science fiction, by combining the rigour of science with the imagination of fiction, plays a big role in expressing these feelings. Novels like Brave New World and 1984 have captivated readers for decades. Two ingredients make these stories so powerful. The first is great science, which in some cases led to surprising accuracy: Jules Verne imagined a propeller-driven aircraft in the late 19th century, when balloons were the best that aviation had to offer. Arthur C. Clarke envisioned the iPad in the 1960s, and Ray Bradbury imagined the Mars landing. It is probably just a matter of time until “Samantha”, the AI voice in Spike Jonze’s film Her, becomes real.

The second ingredient is a keen understanding of our fears and desires. This is what makes these works great objects of study for dissecting the sentiments of an era. The two most successful science-fiction franchises ever, George Lucas’ Star Wars and Gene Roddenberry’s Star Trek, offer a great example of how popular culture combined perceptions of technological progress with contemporary hopes and fears.

The first episode of Star Trek aired in 1966. In the first two and a half decades after the Second World War, sometimes referred to as the “golden quarter”, growth in Europe and the United States exploded, Germany experienced its Wirtschaftswunder, and Japan became an industrial powerhouse. The fruits of the so-called Second Industrial Revolution, electricity and the internal combustion engine (but also chemicals), produced a steady stream of technological wonders. Concorde may have been the most iconic. For most of modern history, the top speed of passenger travel had been 25 mph. By 1900, some brave souls had pushed their vehicles to 100 mph. Concorde, which first flew in 1969, could reach around 1,350 mph. The developed world embraced progress, unleashing far-reaching changes not only in technology but also in politics, society and culture: the UN came into being, millions of colonized peoples in Asia, Africa and the Middle East won political independence, and the civil rights movement was born.

The Star Trek universe was not only a world of wondrous technologies; it was also a world of high-minded idealism, free of social classes and divisions of race, gender or ethnicity, a planetary federation governed by the rule of law, a “kinder, gentler Soviet Union … that actually worked,” as David Graeber puts it. Infused with the enthusiasm of its era, Star Trek embodied a deep belief in technology as an engine of societal progress. Yes, the quest for progress involves significant risks, but the potential for knowledge and advancement is more significant. As Captain James T. Kirk exclaimed in the second Star Trek season: “Risk! Risk is our business. That’s what this starship is all about!”

Big progress inspired even bigger dreams. Force fields and warp drives became common expressions, and reputable magazines assured readers that the future would bring colonies on Mars and matter-transforming devices. But the fervour did not last. On 15 August 1971, US President Nixon suspended the convertibility of the dollar into gold, and the post-war Bretton Woods system began to unravel. Shortly thereafter, an oil crisis rattled the world economy, further depressing growth and employment. High inflation and low employment demolished trust in markets and in the ability of governments to correct them.

Moreover, after two decades of breakneck growth, the pressure of human activities on natural resources began to show. One year after the Nixon Shock, the Club of Rome published its famous Limits to Growth report, which warned of catastrophic consequences unless leaders radically cut resource use; the environmental movement started to form. Suddenly, technology was part of the problem, straining the limits of the planet and the mind. Alvin Toffler’s 1970 bestseller Future Shock hit a nerve when it argued that our brains are ill-prepared for such a pace of change.

Suddenly progress turns into disruption

These developments were the backdrop for the first Star Wars film. Released in 1977, it quickly became a box office hit and the highest-grossing film of all time. Comparing the visual language of the two science-fiction blockbusters is revealing: the flashy colours of the Star Trek fleet turn into the dark colours of Darth Vader; peaceful coexistence turns into civil war; dreams turn into nightmares. While Star Trek continued to attract a large following, a new generation of science fiction turned space from a source of wonder and adventure into a canvas for our worst nightmares. In 1979, Alien finally made space the place where “no one can hear you scream.”

The Nixon years have more in common with today than the intervening decades suggest. The threats we face, from climate change to overpopulation, resemble the dangers the Club of Rome highlighted in 1972. Now as then, economic uncertainties abound, as do questions about the commitment and capability of states to fix them: trust in markets has been down since the 2008 “Lehman Shock” triggered the global economic crisis; trust in governments is battered by rising inequality and a sense of insecurity in many countries; and technology, celebrated in the 1990s as the engine behind a new “productivity miracle” and “hyper-globalization”, is blamed for job polarization and environmental decay.

In the heady globalization years, technological progress made the world flatter and more egalitarian; now it makes it spikier, dirtier and riskier. And Star Wars is once again the highest-grossing film ever in North America.

Most stories of tomorrow are echo-chambers of today

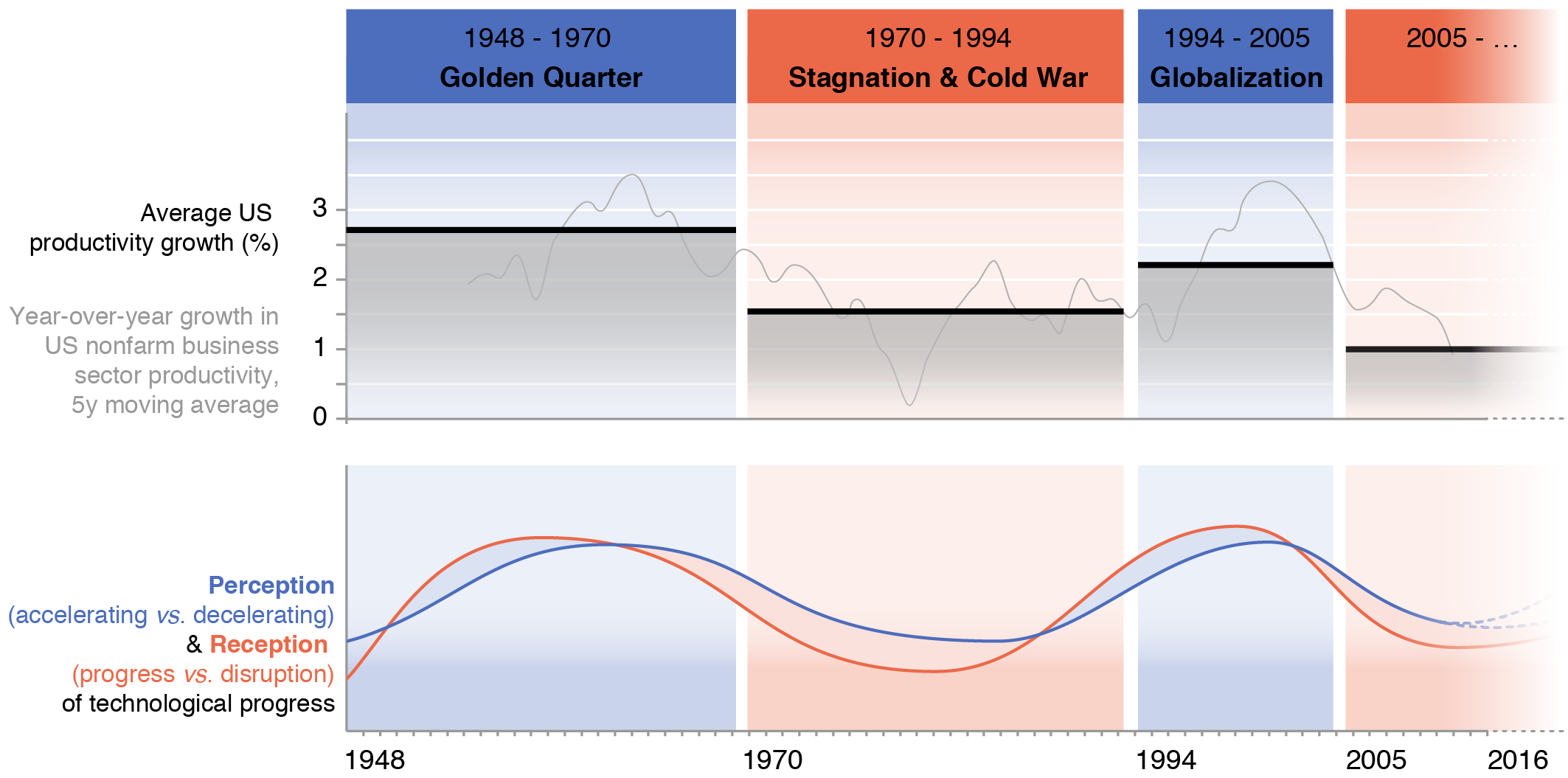

Most stories of tomorrow are echo chambers of today, revealing more about us than about the future. The graph above illustrates this thought. The upper section shows average productivity growth in the US over four discrete periods. Productivity is an imperfect yet useful measure of how a society is evolving, for it determines how rapidly an economy can grow without rampant inflation. The lower section visualizes how society dreams about progress on two levels: the blue line represents our perception of technological change as accelerating or decelerating; the orange line represents the predominant reception of technological change as an opportunity or a threat.

Both the perception (is it actually happening?) and the reception (is this good or bad?) of technological change are strongly correlated with productivity growth, in part because technology is a key factor behind it. Yet because major innovations are known to show up in productivity statistics only with a lag, major shifts in the economic climate are usually attributed to other factors first. Hence such shifts change how technological change is received before they change how it is perceived.

In the post-war decades, productivity grew at an average of 2.7% a year, enough for living standards to roughly double over that period. Technological change was seen as an enabler of societal progress and, along with new economic and societal freedoms, dreams of future technological possibilities, from flying cars to colonies on Mars, grew ever bigger.

With the Nixon Shock, things changed. Average productivity growth dropped to 1.5% a year from 1970 until 1994, which meant the time needed for living standards to double increased from 25 to 45 years. This first shifted the reception of technology from being greeted as a force for good to being feared as a potential threat. As the malaise continued, the perception of technology as an accelerating force in human evolution dropped, too. “Progress” was replaced by “innovation”, a smaller and more neutral term with no built-in expectation of moral and social improvement.
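The doubling times quoted here follow from compound growth. As a worked check, assuming productivity grows at a constant average annual rate $g$ and that living standards track productivity, the doubling time $T$ satisfies:

$$
(1+g)^{T} = 2 \quad\Longrightarrow\quad T = \frac{\ln 2}{\ln(1+g)}
$$

$$
g = 0.027:\quad T = \frac{0.6931}{0.02664} \approx 26 \text{ years}; \qquad g = 0.015:\quad T = \frac{0.6931}{0.01489} \approx 47 \text{ years}
$$

These values sit close to the rounded 25 and 45 years given in the text; the familiar “rule of 70” ($T \approx 70 / g$, with $g$ in percent) gives about 26 and 47 years. Over a 25-year “golden quarter”, 2.7% annual growth compounds to $(1.027)^{25} \approx 1.95$, consistent with living standards roughly doubling.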

It took another shock, the collapse of the Soviet Union and the end of the Cold War, to invert the interplay of reception and perception once again. In the hyper-globalization era of the 1990s and 2000s, average growth almost returned to post-war heights. The fruits of the digital revolution began to show, and technology was again seen as a force for good, spreading the economic virtues of capitalism and the political values of the West across the planet. But then the 2007-2009 financial crisis brought down the economic pillar of this dream; the rise of China as a global power with little appetite for Western values undermined the political one soon after.

While this story is a vast simplification of modern economic history, it helps to make sense of the mainstream public discourse on technological progress. Average productivity growth since the global economic crisis is down to just over 1%, lower than after the Nixon Shock. As a result, both reception and perception of technological change are being reconstructed in popular discourse; it is a process to which we must pay attention, not as science-fiction enthusiasts, but as citizens and leaders.

Reconstructing our history of the future

Seven years into the global economic recovery, the reconstruction of our technology narrative is in full swing. Is the recovery weak because innovation has slowed down? Those supporting the motion point to historical productivity figures that show exceptional growth rates in the post-war decades, attributed to the Second Industrial Revolution, and a short burst in the 1990s attributed to the “Digital” Revolution.

Both effects, they claim, are now exhausted. A study of US patent data lends some support to this idea: it found that, since 1970, the share of “broad” innovations (those combining radically different technology categories) dropped from 70% to 50%. Recent major works in economics that predict a longer period of stagnation, from Piketty’s Capital in the Twenty-First Century to Gordon’s The Rise and Fall of American Growth, all rest on this assumption.

On the other side of the debate, experts and entrepreneurs retort that such an analysis gravely underestimates the disruptive implications of the digital revolution. Author and futurist Ray Kurzweil has argued since the 1990s that technological progress in areas such as nanotech, artificial intelligence and biotech will increase exponentially until a “rupture in the fabric of human history occurs.” In 2014, The Second Machine Age by Erik Brynjolfsson and Andrew McAfee further popularized the idea of a new technological era fuelled by the recombinant powers of true artificial intelligence and ubiquitous connectivity. This idea finally hit the mainstream when the World Economic Forum introduced the vision of a Fourth Industrial Revolution in a book of the same title by its Founder and Chairman Klaus Schwab. The concept also became the theme of the Forum’s 46th Annual Meeting in January 2016.

While it is nearly impossible to predict the path of technological progress, there are signs that humanity has cleared major hurdles. One is the victory of Google’s AlphaGo over Lee Sedol, one of the world’s top players of the Asian strategy game of Go. Go is more complex than chess: the number of possible board positions exceeds the number of atoms in the universe. This is why massive computing power is not sufficient; intuition and creativity, so far considered genuinely human capabilities, are crucial to winning. Instead of just calculating large numbers of moves, AlphaGo uses artificial neural networks, loosely inspired by the brain, that learned to recognize patterns from a large collection of past games. What’s really different (and kind of scary) about AlphaGo is not that it won, but that we cannot say exactly how, just as much of our own knowledge sits in the brain without us being able to articulate it. It is this evolutionary step that thrills computer scientists so much, and that shocked so many people across Asia.
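The claim about the size of Go’s search space can be checked with a back-of-the-envelope calculation. The sketch below is only an illustration under stated assumptions: a standard 19×19 board, each intersection either empty, black or white (so $3^{361}$ overcounts, because many such configurations are illegal), and the commonly cited order-of-magnitude estimate of $10^{80}$ atoms in the observable universe.

```python
import math

# Assumption: a standard 19 x 19 Go board.
BOARD_POINTS = 19 * 19  # 361 intersections

# Each intersection is empty, black or white, so 3**361 is an upper bound
# on board configurations (it also counts illegal ones).
upper_bound = 3 ** BOARD_POINTS

# Assumption: commonly cited order-of-magnitude estimate for the number
# of atoms in the observable universe.
ATOMS_ESTIMATE = 10 ** 80

digits = len(str(upper_bound))
print(f"3**{BOARD_POINTS} has {digits} digits (about 10^{math.log10(upper_bound):.1f})")
print("Exceeds atom estimate:", upper_bound > ATOMS_ESTIMATE)
```

Run as written, this reports a 173-digit number, roughly $10^{172.2}$. Even if only a small fraction of these configurations were legal positions, the count would remain vastly larger than $10^{80}$, which is why exhaustive calculation alone cannot master the game.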

Progress in AI is just one example. At the intersection of the digital and physical domains, electronic platforms are reducing transaction costs dramatically, augmented-reality goggles are maturing, and billions of connected assets are transforming business models and customer experiences. Across the digital and biological domains, progress in genetic sequencing is moving synthetic biology and precision medicine closer to reality. At the juncture of the physical and biological worlds, new materials and brain-computer interfaces are making Kurzweil’s vision of transhumanism seem less eccentric.

The path of progress remains full of challenges, more than hypesters are ready to admit. In AI, the material limits to shrinking silicon chips and the energy limits to computing power remain unresolved: by one estimate, simulating just one brain with current technology would require the capacity of China’s Three Gorges Dam, the world’s largest power station. Nevertheless, the “new machine age” narrative, at least for now, seems to be winning points in the ongoing battle of ideas.

The real risk is neither pessimism nor optimism but fetishism

Technological change is top of mind for leaders, be they CEOs, heads of civil-society organizations or government officials. Yet, the unbreakable optimism of Silicon Valley aside, with low growth and rising tensions, anxiety often beats anticipation. Business leaders tirelessly warn of being “disrupted” by technology; economists from David Autor to Laura Tyson warn of job polarization and inequality; thinkers like political scientist Francis Fukuyama and historian Yuval Harari fear that human enhancement could jeopardize social cohesion, or, as bioethicist Leon Kass put it: “human nature itself lies on the operating table.”

But the real issue is neither excessive pessimism nor optimism; it is fetishism, the lopsided idea that we are denied the power to write our own history. Technologies do not evolve in isolation; they are as much products of values and institutions as of science and engineering. To be intelligent, technology visions must be humanist. As obvious as that sounds, it cannot be repeated enough. Too often this message gets lost in the hype created by technology fetishists on both ends of the spectrum. The risks are significant: those preaching salvation risk disillusionment at best and delusion at worst; those preaching demise risk suffocating innovation and entrepreneurship.

The digital era is rife with thwarted aspirations that have triggered big shifts in how technology is received:

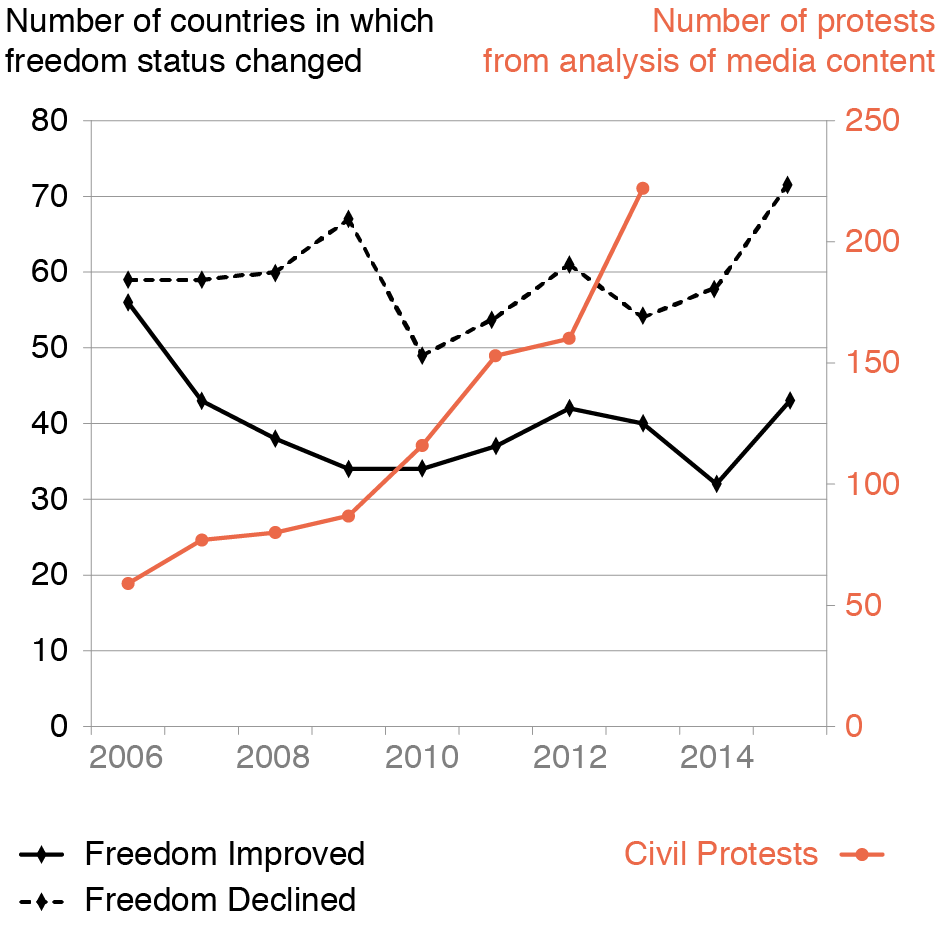

(1) The aspiration of connectivity fostering democracy. For a while, it seemed to work, but then the Arab Spring failed in most countries; the “99%” movement disappeared as fast as it had appeared; and Ed Snowden revealed that technology was “upgrading” not only individuals but also traditional institutions such as intelligence agencies. Disillusionment was the result; terms like slacktivism and clicktivism entered our vocabulary. Yet the lesson should be a different one: there is nothing in technology that inevitably makes us more or less free. Many societies where connectivity helped citizens rise up against autocrats lacked the structures needed to re-establish order.

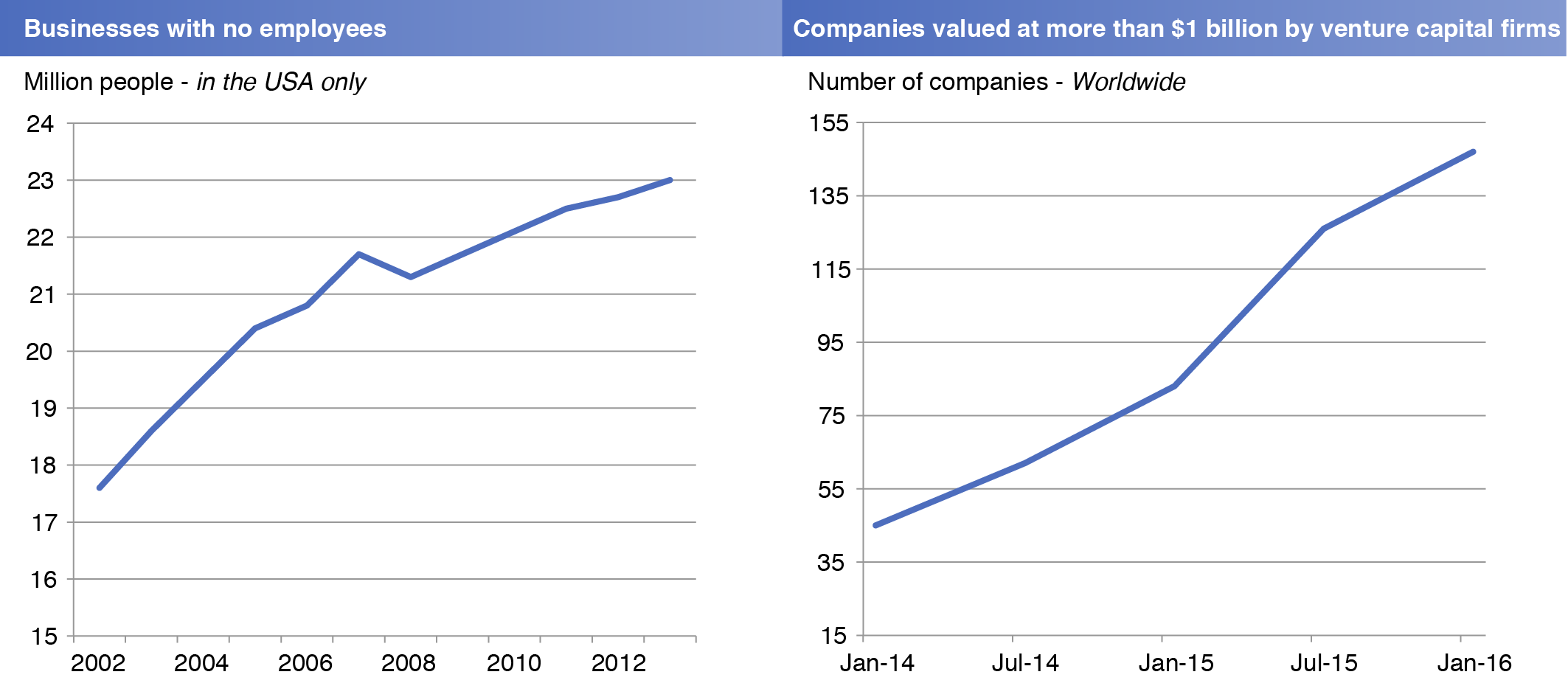

(2) The aspiration of a technology-driven start-up boom. No doubt, geeks and entrepreneurs are the new rock stars, but according to the US Census Bureau, start-ups as a share of all companies have been declining for 30 years. While it would be wrong to proclaim the end of American entrepreneurship, this statistic puts the hype around app moguls and teenage billionaires into perspective. Moreover, start-ups like Uber that are valued at $1 billion or more are growing in number, but so are Uber-driver-type “businesses without employees”. This raises questions about worker benefits and social protection. Disillusionment over the brave new world of work is a natural reaction, but the deeper lesson should be a different one: there is nothing in technology that makes us more or less entrepreneurial, and nothing that inevitably makes or breaks the market power of large technology firms.

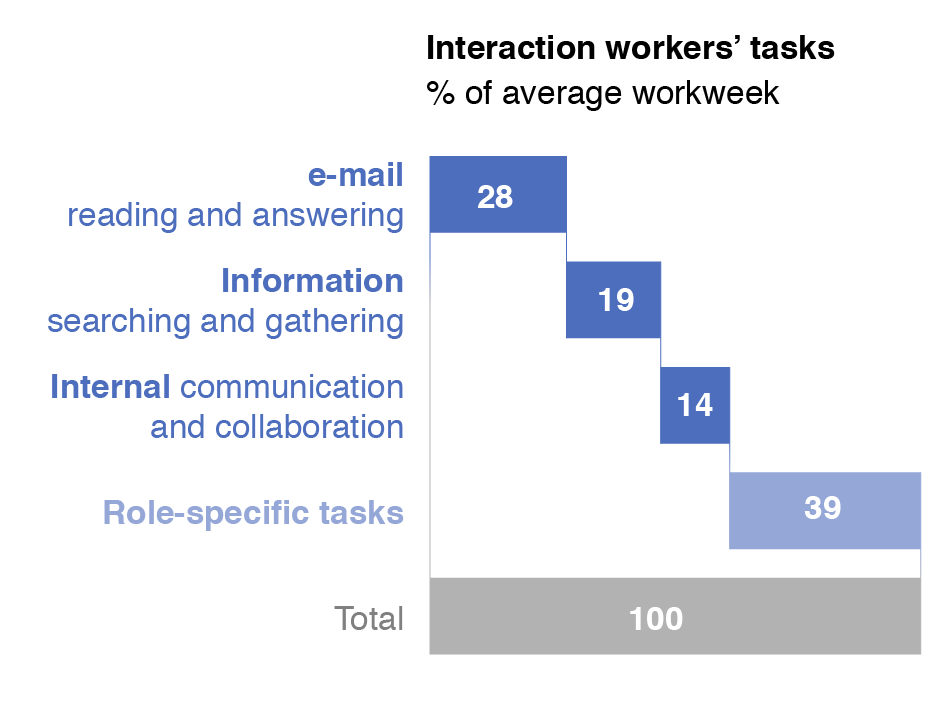

(3) The aspiration of liberating our creative potential. Carrying a supercomputer in our pockets offers us new ways to be creative and express ourselves. But according to a 2012 McKinsey study, the average knowledge worker today spends more than 60% of the work week on email and searching the internet. “The ability to perform deep work is becoming increasingly rare at exactly the same time it is becoming increasingly valuable in our economy,” writes Cal Newport in his recent book, Deep Work. Just as industrial automation in the 18th and 19th centuries made most people full-time industrial workers, the digital revolution is turning us into part- or full-time administrators. Does tech make us stupid? No, but we should come to terms with the fact that there is simply nothing in digital technology that inevitably makes us more or less creative.

Stating that technology has no agency does not mean it is “neutral”. On the contrary, technology is deeply embedded in society, creating opportunities and setting limits. It is embedded in the material world, from how we build homes to where we build roads and pipes; it is embedded in our values, from how we maintain relationships to ideas like “privacy”; it is embedded in our language, making us “google” instead of search and “whatsapp” instead of send.

Technology is not neutral, but we need to avoid the post hoc fallacy of conflating what technology does with why it does it and how we got there. Mixing these aspects is what leads to fetishized dialogues that blame “the internet” for X and “mobile phones” for Y. Unpacking the power we attribute to technology is the starting point for a debate on how technology could serve us better.

In fetishized technology dreams, the present contains the future, and the future explains the present. This is why they often fail and disappoint. Technology visions must neither assume that wars and injustice simply persist, nor that they will magically go away. They must not be closed stories, but open narratives in which the resolution ultimately hinges on us, and in which we are the heroes.

Liberating our imagination

How can we liberate our dreams of a technological future from our biases? How can we create an emancipatory movement that humanizes progress? How can we reach beyond real and imagined limits while fostering a debate about the feasibility and desirability of the possible futures science and technology could hold? These have become central questions for the World Economic Forum and the team that I lead. I shall leave a detailed discussion of these questions to the second part of this essay, but the path to an answer begins with two simple words: “what if?” This is “question zero” for much of the work we do. There is something incredibly powerful in connecting minds and hearts with different places in the past, present and future.

What if information could be downloaded from your brain? What if algorithms were to replace soldiers and generals? What if we could live for 150 years, or forever? Such questions are deliberately simplified, provocative and fictional. We want to encourage decision-makers to suspend their disbelief, explore alternative futures and open up spaces for debate and discussion about preferable futures.

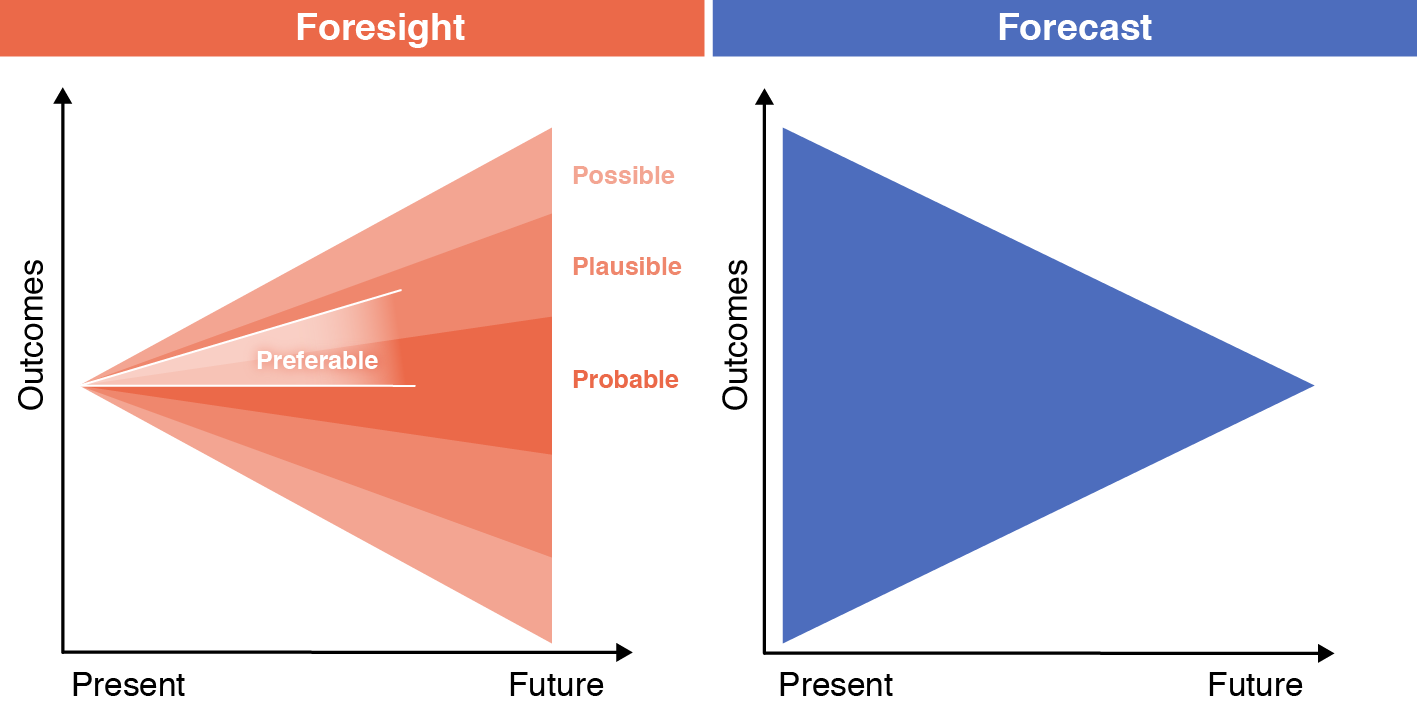

In Speculative Everything, Anthony Dunne and Fiona Raby illustrate the idea with a cone-shaped diagram that distinguishes probable, plausible and possible futures. The inner cone contains what is likely to happen barring some extreme event; the second is the space of scenario planning and foresight, made up of what could happen if key drivers change; the third covers outcomes that are theoretically and practically feasible. The probable is the realm of the weather forecaster; it is about prediction, simplifying and narrowing down a future that is already given. Most of the time, however, reality already narrows our ideas and perceptions of the future. Hence, much of our work is about helping leaders consider alternative futures in the realm of the plausible and the possible.

This is hard work. Our brains are hardwired not to suspend disbelief. Not insecurity and hesitation, but overconfidence is the most common reason for failure. This is why my team and I have turned to new ways of making alternative futures more tangible. Learning from and collaborating with speculative designers like Dunne and Raby, as well as filmmakers like Lynette Wallworth and other acclaimed artists, we create new worlds – virtual and physical – that surprise and provoke, touch and sometimes disturb.

In a co-curated exhibition with London’s Victoria and Albert Museum, for instance, a functional 3D-printed gun lent urgency to the question “What if everyone could download and print their own weapon?”; a silicone mask created through a process called phenotyping triggered the question “What if everyone could be copied from their DNA?”; and a solar-cell-augmented dress asked “What if your body collected all the energy you need?” All of these questions are deliberately simplified and provocative, but also rooted in real science.

The goal is never to predict, but to encourage decision-makers to step out of the frame and imagine that things could be radically different. We need more of this thinking to find opportunities in a new era of limits.

In that regard, comparing the Nixon and Lehman Shock years also offers some encouragement: 1977 was not only the year Star Wars became a box office hit, but also the year Steve Jobs and Steve Wozniak presented the Apple II, one of the first commercially successful mass-produced microcomputers. It marked the beginning of the digital era. Where some see limits, others see opportunities.

Technology won’t determine our future; we will.

Featured Image: Prototype space shuttle Enterprise, named after the fictional starship, with Star Trek television cast members and creator Gene Roddenberry, 17 September 1976.